Z.ai: IPO Breakdown

Z.ai IPO Deep Dive

👋 Hi, it’s Rohit Malhotra and welcome to the FREE edition of Partner Growth Newsletter, my weekly newsletter doing deep dives into the fastest-growing startups and S1 briefs. Subscribe to join readers who get Partner Growth delivered to their inbox every Wednesday morning.

Partners

Hiring in 8 countries shouldn't require 8 different processes

This guide from Deel breaks down how to build one global hiring system. You’ll learn about assessment frameworks that scale, how to do headcount planning across regions, and even intake processes that work everywhere. As HR pros know, hiring in one country is hard enough. So let this free global hiring guide give you the tools you need to avoid global hiring headaches.

Interested in sponsoring these emails? See our partnership options here.

Subscribe to the Life Self Mastery podcast, which guides you on raising funding and growing your business at rocketship speed.

Previous guests include Guy Kawasaki, Brad Feld, James Clear, Nick Huber, Shu Nyatta, and 350+ other incredible guests.

S1 Deep Dive

Z.ai in one minute

Zhipu AI (Z.ai) is building foundational infrastructure for artificial general intelligence in China, developing proprietary large language models that power enterprise applications, consumer products, and developer ecosystems at scale. In a market where many AI companies rely on derivative architectures and imported frameworks, Zhipu handles end-to-end model development, from pre-training through commercialization, establishing technological independence while achieving rapid revenue growth.

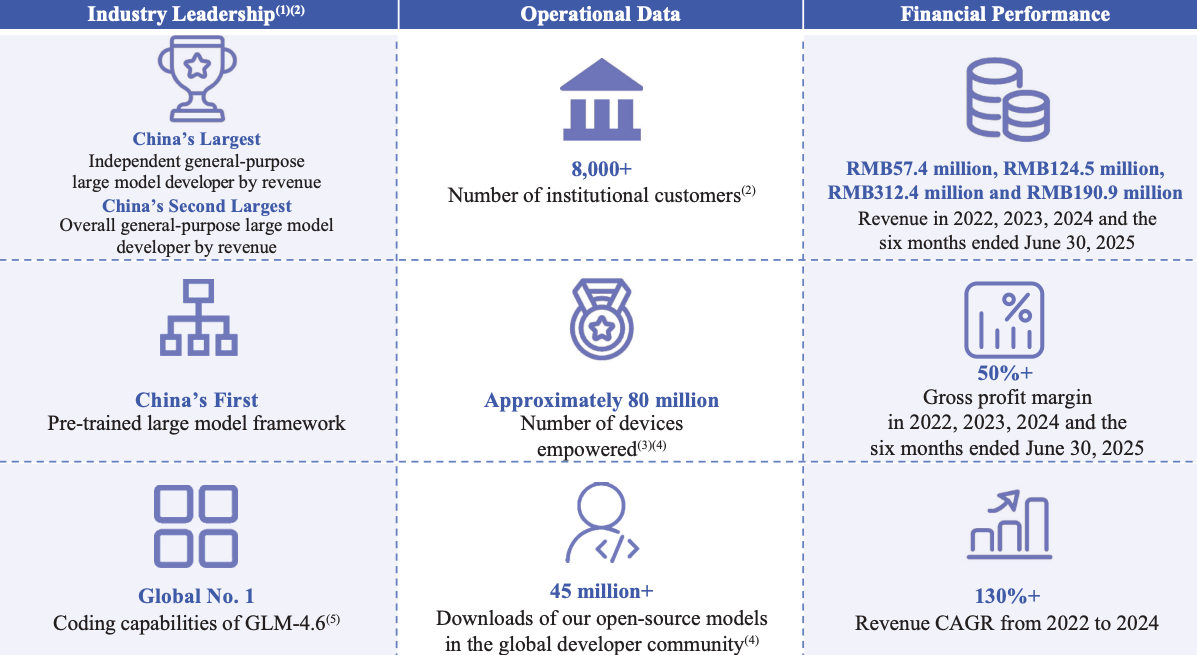

Founded in 2019 with a singular focus on AGI research, Zhipu has steadily advanced China's frontier AI capabilities. The company launched GLM in 2021, the country's first proprietary pre-trained large model framework, and open-sourced GLM-130B in 2022, giving researchers and developers access to a model with more than 100 billion parameters. This technical foundation now powers a Model-as-a-Service (MaaS) platform serving over 8,000 institutional customers and approximately 80 million devices.

Zhipu's market position reflects execution at scale. According to Frost & Sullivan, the company ranked first among China's independent developers and second overall in general-purpose large models, capturing 6.6% market share by revenue in 2024. This performance spans both enterprise deployments—private companies and public sector entities—and individual users ranging from end consumers to developers building on Zhipu's APIs.

The company's competitive advantage stems from vertical integration across the AI value chain: foundational research, model architecture, training infrastructure, and commercial deployment operate as a unified system. This enables rapid iteration cycles, customized enterprise solutions, and cost-efficient scaling that fragmented approaches cannot match.

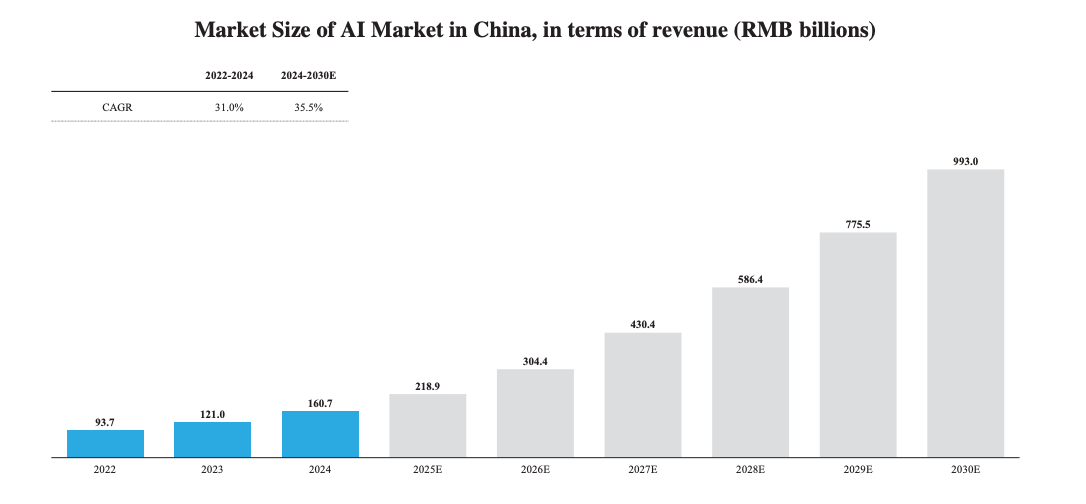

The opportunity ahead is substantial. China's AI market is expanding rapidly as enterprises integrate large models into core operations—from customer service automation to scientific research acceleration. Zhipu operates at this inflection point, positioned to capture demand from organizations seeking domestically developed, commercially proven AI infrastructure. With demonstrated technical leadership, expanding customer penetration, and a clear path from research breakthrough to production deployment, Zhipu is defining how general-purpose AI will be built, deployed, and monetized across China's digital economy.

Introduction

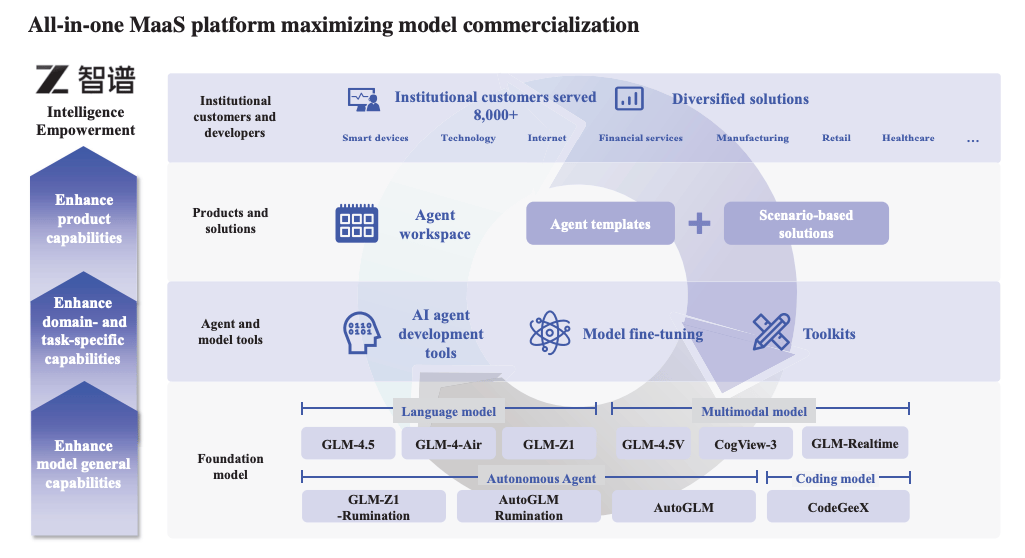

At its core, Zhipu AI is a foundation model company. It was among the first in China to show that proprietary large language models could achieve world-class performance through original architecture developed entirely in-house rather than through imported frameworks. Starting with GLM in 2021, China's first proprietary pre-trained large model framework, Zhipu has built a vertically integrated MaaS platform spanning language models, multimodal systems, coding assistants, and autonomous AI agents, all built on its 355-billion-parameter GLM-4.5 foundation model. By replacing dependence on foreign AI architectures with end-to-end domestic development, Zhipu's approach reflects a view of how intelligent infrastructure should work: independently developed, commercially proven, and scalable from research to mass deployment.

This infrastructure powers both reach and reliability. Zhipu's incentives are aligned with its customers': the company delivers models through flexible deployment, on-premise for enterprises that require data sovereignty and cloud-based for those seeking agility. With models compatible with 40+ chip platforms and optimized for everything from mobile devices to enterprise data centers, Zhipu aims to keep inference costs competitive while delivering high accuracy. The result is a network-led flywheel: enterprises adopt Zhipu's models for performance, developers build on its open-source releases for accessibility, and device manufacturers integrate its solutions across approximately 80 million endpoints. As of June 2025, over 8,000 institutional customers had deployed Zhipu's technology, evidence of broad platform adoption.

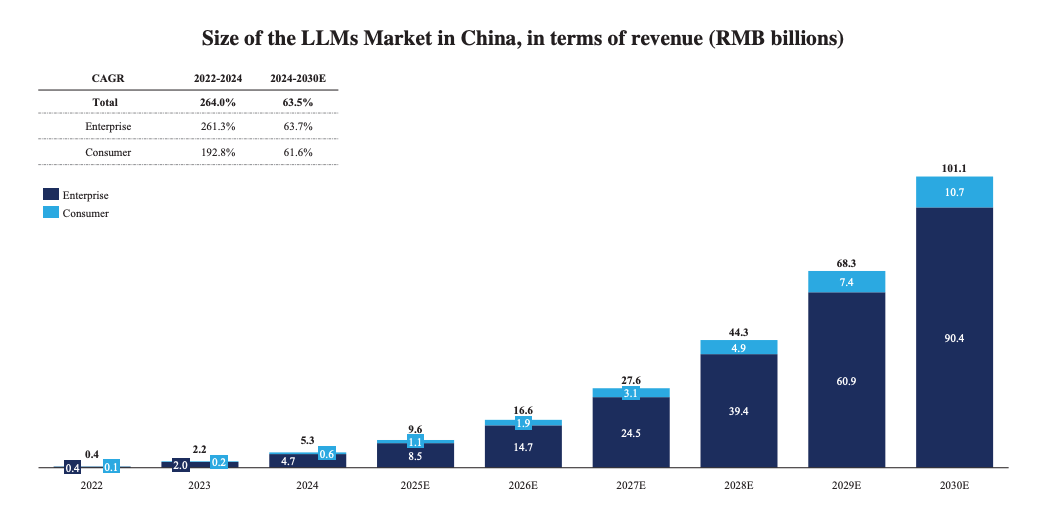

The market shift is foundational. China's LLM market reached RMB5.3 billion in 2024 and is projected to grow to RMB101.1 billion by 2030, a 63.5% CAGR. Zhipu AI is built for this inflection point: it ranks first among China's independent large model developers and second overall with 6.6% market share, grew revenue at over 130% CAGR from 2022 to 2024, and has kept gross margins at or above 50%, using proprietary technology purpose-built to become the intelligence layer connecting enterprises, developers, and consumers in the next generation of AI-native applications.
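Both growth rates quoted above follow from the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The minimal Python sketch below checks them using only the rounded figures stated in this section; the helper name `cagr` is illustrative and does not come from the filing.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1 / years) - 1

# China LLM market, RMB billions: 2024 actual to 2030 projection (6 years)
market_cagr = cagr(5.3, 101.1, 2030 - 2024)

# Zhipu revenue, RMB millions: 2022 to 2024 (2 years)
revenue_cagr = cagr(57, 312, 2024 - 2022)

print(f"China LLM market CAGR, 2024-2030: {market_cagr:.1%}")
print(f"Zhipu revenue CAGR, 2022-2024: {revenue_cagr:.1%}")
```

With these inputs the market figure comes out at about 63.5%, and revenue at about 134%, consistent with the "over 130%" claim.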

History

Zhipu AI began with a direct challenge to China's AI development orthodoxy: world-class general-purpose intelligence could be built domestically, not imported from foreign frameworks. For years before Zhipu's founding, China's AI landscape operated on derivative architectures—adapted versions of overseas models, fragmented research efforts, and limited pathways from academic breakthroughs to commercial deployment. Enterprises waited for foreign vendors to localize solutions. Developers built on borrowed foundations without control over core infrastructure. The ecosystem was dependent, reactive, and constrained by design.

Dr. Liu Debing and Dr. Tang Jie, co-founders with deep roots in Tsinghua University's AI research community, saw an opening. After years advancing knowledge graph technology and natural language processing in academia, they recognized that China needed proprietary foundation models built from first principles. In 2019, Zhipu launched with the thesis that self-developed pre-training frameworks could power China's AI transformation end to end, achieving global benchmark performance while maintaining technological independence. The strategy was intentionally contrarian: build proprietary architecture rather than adapt open-source alternatives, replace fragmented solutions with an integrated MaaS platform, and create unified infrastructure where capabilities compound with every connected customer.

What started as foundational research evolved into vertically integrated AI infrastructure. Zhipu launched GLM in 2021—China's first proprietary pre-trained large model framework—then open-sourced GLM-130B in 2022, proving the technology worked at scale. Product expansion accelerated: from foundation models to multimodal systems, autonomous agents, and coding assistants—with institutional customers growing to over 8,000 by June 2025.

By 2024, Zhipu's MaaS platform reached an inflection point as a unified intelligence layer connecting enterprises, developers, and approximately 80 million devices. More deployments generated more usage data, which improved model performance, which enabled broader applications, which attracted more customers. The infrastructure compounded: customers adopted for language capabilities, expanded for multimodal applications, and scaled across agentic workflows as the platform demonstrated measurable value.

Risk factors

Zhipu AI operates in a rapidly evolving large language model market, where execution risk, regulatory complexity, and competitive intensity can materially impact growth trajectory and profitability. Below are the primary risks associated with Zhipu's business model.

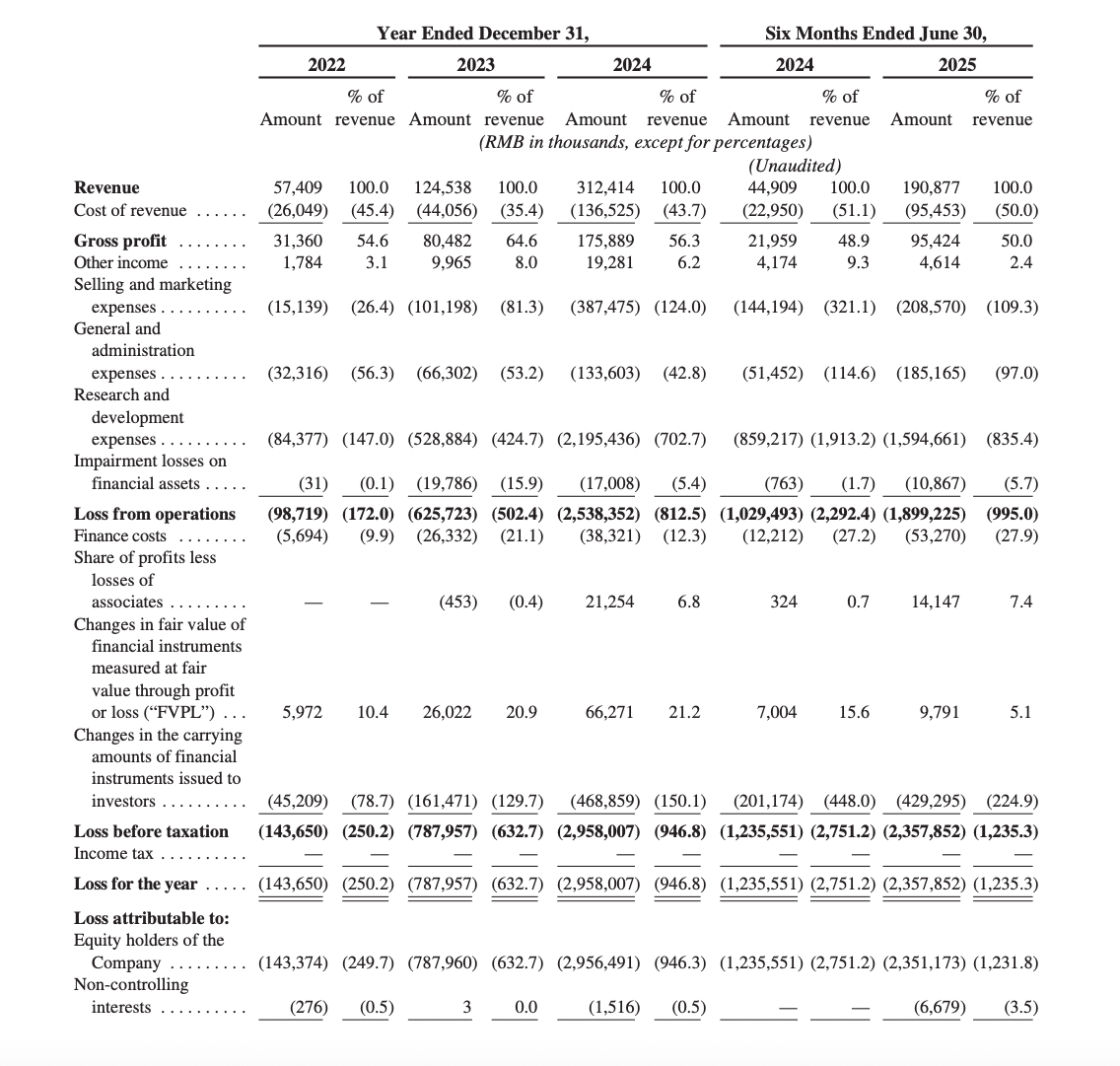

History of Losses and Uncertain Path to Profitability

Zhipu has incurred net losses every fiscal year since inception: RMB144 million in 2022, RMB788 million in 2023, and RMB2,958 million in 2024, with losses of RMB2,358 million in the first half of 2025. Substantial investments in R&D—representing 702.7% of revenue in 2024—and infrastructure scaling continue to pressure margins. While revenue grew at over 130% CAGR from 2022 to 2024, sustained profitability remains uncertain given the capital intensity of foundation model development.

Computing Resource Dependency and Supply Chain Risk

Computing service fees consumed 71.8% of R&D expenses in the first half of 2025. Zhipu relies on third-party providers for the high-performance computing resources essential to model training and inference. Zhipu's addition to the U.S. Entity List in January 2025 restricts its access to certain U.S.-origin technology, potentially limiting the availability of computing hardware. Supply disruptions or price increases could materially affect operations and development timelines.

Intense Competition and Market Consolidation

Zhipu competes against well-capitalized non-independent providers—technology giants with established customer bases, diversified revenue streams, and substantial R&D budgets. While Zhipu ranks first among independent developers with 6.6% market share, non-independent competitors hold the top position overall. If cross-sell rates disappoint or models fail to maintain benchmark leadership, competitive differentiation could erode rapidly.

Regulatory Evolution and Compliance Burden

China's AI regulatory landscape is evolving quickly, including the 2023 Interim Measures for the Management of Generative Artificial Intelligence Services and expanding cybersecurity requirements. New laws may impose additional compliance obligations, restrict certain applications, or require costly infrastructure modifications. Failure to adapt to regulatory changes could result in operational restrictions or penalties.

Customer Concentration Risk

The top five customers represented 40.0% of revenue in the first half of 2025. Loss of major enterprise or public sector customers—due to competitive displacement, budget constraints, or shifting technology strategies—could cause sudden revenue declines.

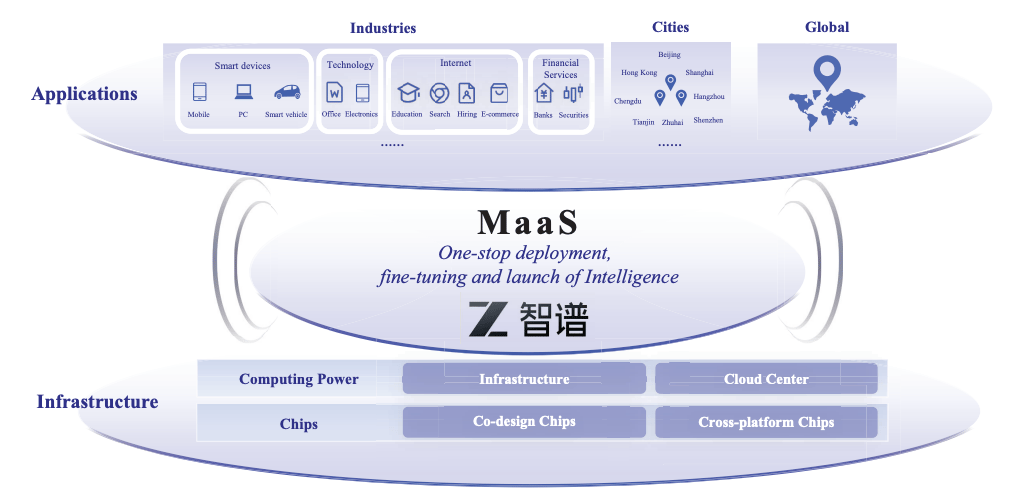

Market Opportunity

The large language model landscape is experiencing a structural inflection point. Zhipu AI addresses a projected RMB101.1 billion market opportunity by 2030, delivering foundation models, multimodal systems, autonomous agents, and coding assistants through a vertically integrated MaaS platform spanning enterprises, developers, and consumer devices.

Displacing Fragmented AI Solutions

Zhipu's primary opportunity lies in replacing disconnected point solutions in enterprise AI adoption. China's LLM market reached RMB5.3 billion in 2024, with institutional customers contributing RMB4.7 billion. Traditional approaches burden enterprises with siloed vendor relationships, limited customization capabilities, incompatible infrastructure requirements, and fragmented deployment workflows.

Zhipu collapses these silos into a unified platform that offers flexible deployment options and compatibility with 40+ chip platforms. Even while serving over 8,000 institutional customers and approximately 80 million devices as of June 2025, Zhipu held only 6.6% market share in 2024, leaving substantial room to expand as enterprises adopt foundation models, migrate from fragmented solutions, and consolidate vendors onto integrated MaaS infrastructure.

Structural tailwinds persist: Chinese enterprises face mounting pressure to deploy AI across operations, regulatory frameworks increasingly favor domestically developed technology, and global benchmark performance validates indigenous model capabilities. These dynamics reward platforms delivering measurable ROI through operational efficiency, technological independence, and scalable deployment.

Expanding Across Verticals and Modalities

Zhipu's second opportunity is cross-sell expansion across financial services, smart devices, internet platforms, healthcare, and public sector verticals. Customers adopt for language capabilities, then systematically expand across multimodal applications and agentic workflows as the MaaS platform demonstrates value.

Multimodal models address image generation, video synthesis, and visual comprehension. Autonomous agents automate complex multi-step tasks across digital interfaces. Coding assistants accelerate developer productivity. Each capability extends Zhipu's reach into adjacent use cases and industries.

Building the Intelligence Layer

Zhipu's long-term vision is to become the foundational intelligence infrastructure for China's digital economy as model performance and platform adoption reach critical mass. Every enterprise deployment, developer integration, and device connection strengthens Zhipu's moat among organizations that need integrated AI capabilities across language, vision, reasoning, and autonomous action.

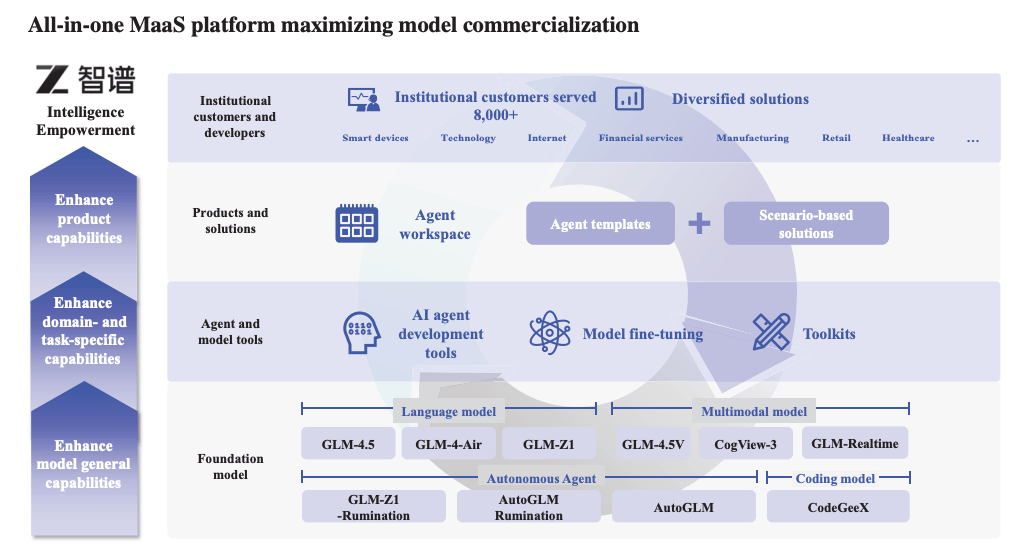

Product

Zhipu's MaaS platform delivers four model categories unified by the GLM foundation—a proprietary architecture connecting language understanding, multimodal cognition, autonomous agents, and code generation. This infrastructure eliminates the fragmented point solutions that burden enterprises with siloed AI vendors, incompatible deployments, and limited scalability.

Foundation Models: Proprietary Intelligence at Scale

Launched with GLM in 2021, Zhipu's foundation models are engineered for benchmark-leading performance with broad infrastructure compatibility. GLM-4.5, the flagship 355-billion-parameter model, ranked third globally and first in China across twelve industry-standard benchmarks in July 2025. GLM-4.6 achieved first place globally on CodeArena for coding capabilities in November 2025. Multi-stage training (pre-training optimization, supervised fine-tuning, and reinforcement learning) is designed to deliver consistent accuracy across agentic, reasoning, and coding tasks. The architecture supports deployment across 40+ chip platforms, enabling scaling from mobile devices to enterprise data centers.

Multimodal Models: Visual Cognition and Generation

Zhipu's multimodal portfolio extends foundation capabilities into image, video, and voice processing. CogView generates images from text descriptions. CogVideoX produces video content. GLM-4.5V delivers visual comprehension and reasoning. GLM-4-Voice enables end-to-end voice interaction. GLM-Realtime powers real-time video applications—transforming static language models into systems that perceive and generate across modalities.

AI Agents: From Chat to Autonomous Action

AutoGLM represents Zhipu's evolution from conversational AI to task execution. Designed for autonomous device control through graphical interfaces, AutoGLM achieved state-of-the-art performance on AgentBench. AutoGLM-Rumination combines deep reasoning with operational capabilities for complex multi-step workflows. CoCo delivers enterprise-grade automation across corporate environments.

CodeGeeX: Developer Productivity Infrastructure

CodeGeeX accelerates programming through automated code generation, completion, and workflow optimization—extending Zhipu's platform into the developer tooling market.

Recursive Improvement Architecture

Over 8,000 institutional customers and 80 million connected devices generate continuous usage data. Open-source releases, with over 45 million global downloads, expand the training feedback loop. This compounding advantage creates barriers that competitors would struggle to replicate: proprietary architecture, diverse deployment data, and cross-modal model integration, which together drive platform expansion as customers adopt foundation models, expand into multimodal applications, and scale across agentic workflows.

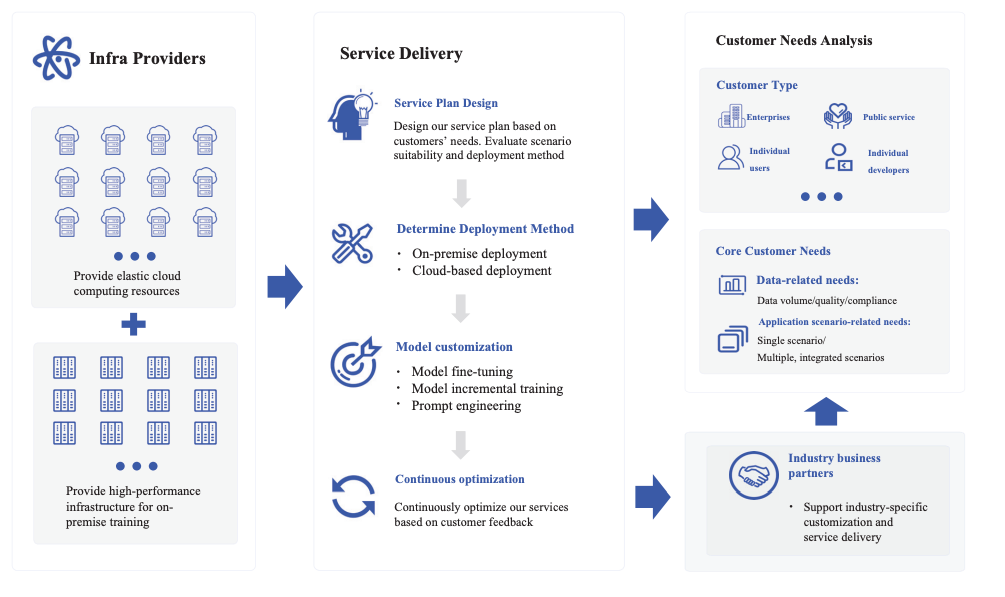

Business Model

Zhipu's model is research-native, commercially proven, and adoption-compounding—built as integrated MaaS infrastructure that delivers measurable intelligence outcomes while reinforcing customer retention through multi-product expansion and the foundation model flywheel.

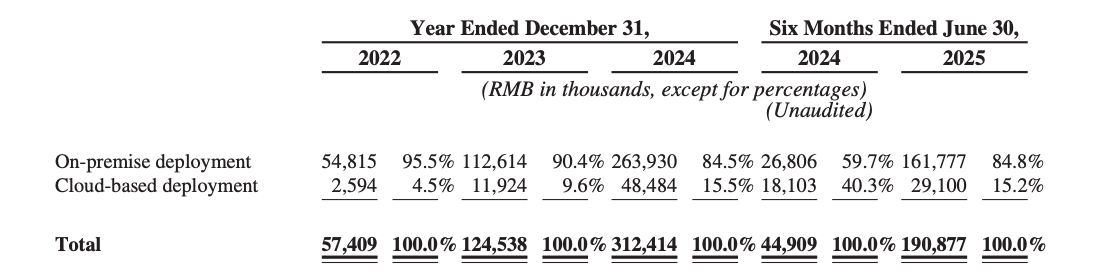

Dual Deployment Architecture

1) On-Premise Deployment

Economics: 84.8% of revenue in the first half of 2025 came from enterprise deployments hosted within customer infrastructure. Organizations use proprietary data to customize models for specific domains, maintaining full control over performance optimization and data security.

Mix: Financial services, public sector, manufacturing, and other enterprises requiring data sovereignty, drawn from Zhipu's 8,000+ institutional customers.

Why it matters: High-value engagements with customers demanding customization and compliance. Revenue is recognized upon delivery and acceptance, creating predictable project-based income while establishing deep platform dependencies through integration with customer workflows.

2) Cloud-Based Deployment

Economics: 15.2% of revenue in the first half of 2025 came from scalable cloud infrastructure that eliminates costly local deployments. Subscription-based contracts recognize revenue ratably; usage-based contracts scale with customer consumption through API access.

Why it matters: Strategic land-and-expand mechanism providing low-friction entry for developers and enterprises seeking agility. Cloud deployment drives platform adoption while generating incremental revenue as customers scale token consumption and expand across model categories.
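The three revenue-recognition patterns described for the two deployment models can be sketched in a few lines of Python. This is a simplified illustration, not Zhipu's accounting policy: the contract classes, amounts (in RMB), 12-month term, and per-million-token price are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class OnPremProject:
    """Hypothetical on-premise project: recognized in full when the customer accepts delivery."""
    amount: float
    acceptance_month: int

    def revenue_in(self, month: int) -> float:
        return self.amount if month == self.acceptance_month else 0.0

@dataclass
class CloudSubscription:
    """Hypothetical subscription: recognized ratably (straight-line) over the contract term."""
    amount: float
    start_month: int
    term_months: int

    def revenue_in(self, month: int) -> float:
        if self.start_month <= month < self.start_month + self.term_months:
            return self.amount / self.term_months
        return 0.0

@dataclass
class CloudUsage:
    """Hypothetical API contract: revenue tracks tokens consumed each month."""
    price_per_million_tokens: float
    tokens_by_month: dict[int, int]

    def revenue_in(self, month: int) -> float:
        tokens = self.tokens_by_month.get(month, 0)
        return tokens / 1_000_000 * self.price_per_million_tokens

contracts = [
    OnPremProject(amount=1_200_000, acceptance_month=5),
    CloudSubscription(amount=120_000, start_month=1, term_months=12),
    CloudUsage(price_per_million_tokens=2.0,
               tokens_by_month={1: 5_000_000_000, 2: 8_000_000_000}),
]

# Total recognized revenue per month across all hypothetical contracts
for month in range(1, 7):
    total = sum(c.revenue_in(month) for c in contracts)
    print(f"month {month}: RMB{total:,.0f}")
```

In this toy example the on-premise project produces a single spike in the month of acceptance, while the subscription contributes a flat monthly amount and the usage contract follows consumption, illustrating why project-based revenue can be lumpier than cloud revenue.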

Recursive AI and Data Flywheel

Connected deployments replace fragmentation: over 8,000 institutional customers and approximately 80 million devices generate continuous usage patterns across language, multimodal, and agentic applications, unified through the MaaS platform. More deployments improve model performance, with enterprise feedback informing foundation model optimization and developer integrations expanding use case coverage.

Proprietary architecture ensures independence: full-stack infrastructure combining the self-developed GLM framework, 40+ chip platform compatibility, flexible deployment options, and continuous model iteration. Result: global benchmark leadership and gross margins of 50% or more, while competitors remain dependent on imported frameworks.

Multi-Product Adoption Flywheel Compounds Customer Value

Land with foundation models (demonstrate benchmark performance) → expand into multimodal applications → cross-sell autonomous agents (workflow automation creates immediate ROI) → capture expansion revenue while delivering operational efficiency → reinvest in model improvements and new capability development → deepen retention through MaaS platform dependencies and measurable outcomes.

Management Team

Dr. Zhang Peng – Chief Executive Officer and Co-Founder

Dr. Zhang Peng has served as CEO and Executive Director since co-founding Zhipu in 2019. He previously conducted research at Tsinghua University's Knowledge Engineering Laboratory, focusing on knowledge graphs and intelligent systems. His technical expertise underpins Zhipu's full-stack MaaS architecture combining proprietary foundation models, multimodal capabilities, and autonomous agent systems. Zhang drives commercial execution across 8,000+ institutional customers and approximately 80 million connected devices. Dr. Zhang holds a Ph.D. in Computer Science from Tsinghua University.

Dr. Liu Debing – Chairman and Co-Founder

Dr. Liu Debing has served as Chairman and Executive Director since co-founding Zhipu AI in 2019. He previously served as Associate Professor at Tsinghua University's Department of Computer Science and Technology, where he led research in knowledge graph technology and natural language processing. His vision to build China's first proprietary pre-trained large model framework established Zhipu's foundational strategy: developing indigenous AI architecture and the GLM foundation as infrastructure for enterprise intelligence. Liu's deep academic roots and research leadership position him to execute recursive model improvement and commercial expansion. Dr. Liu holds a Ph.D. in Computer Science from Tsinghua University.

Dr. Li Juanzi – Non-Executive Director and Co-Founder

Dr. Li Juanzi has served as Non-Executive Director since co-founding Zhipu in 2019. She serves as Professor at Tsinghua University's Department of Computer Science and Technology, specializing in knowledge engineering and semantic web research. Her academic leadership strengthens Zhipu's research capabilities and talent pipeline through university partnerships. Dr. Li's expertise in knowledge representation supports foundation model development and enterprise applications. Dr. Li holds a Ph.D. in Computer Science from Tsinghua University.

Investment

Zhipu's financing trajectory reflects steady validation, from academic research foundation to China's leading independent large model platform. Founded in 2019 with deep roots in Tsinghua University's AI research community, the company raised early-stage capital to build proprietary pre-training architecture, establishing technological differentiation in China's nascent LLM market.

Momentum accelerated through model evolution. Eight pre-IPO funding rounds financed development of the GLM framework (launched 2021), the GLM-130B open-source release (2022), and the integrated MaaS infrastructure connecting foundation models, multimodal systems, and autonomous agents. As revenue scaled from RMB57 million (2022) to RMB312 million (2024), a 130%+ CAGR, investor confidence grew around proprietary architecture and enterprise adoption economics.

The inflection arrived as Zhipu demonstrated commercial traction, with institutional customers growing to over 8,000 by June 2025 and approximately 80 million connected devices. Gross margins of 50% or more validated platform unit economics, while its market position (first among China's independent developers and second overall with 6.6% market share) demonstrated competitive strength. This capital fueled expansion across financial services, smart devices, internet platforms, healthcare, and public sector verticals, plus international operations in Southeast Asia.

Having raised over RMB8.36 billion from growth-equity and strategic investors, Zhipu financed a vertically integrated, research-native stack powering language models, multimodal cognition, autonomous agents, and coding assistants. The result is a capital-intensive but scalable operating model aligned with network effects, reaching RMB191 million in revenue in the first half of 2025 while investing toward long-term infrastructure dominance in China's LLM market, projected to reach RMB101 billion by 2030.

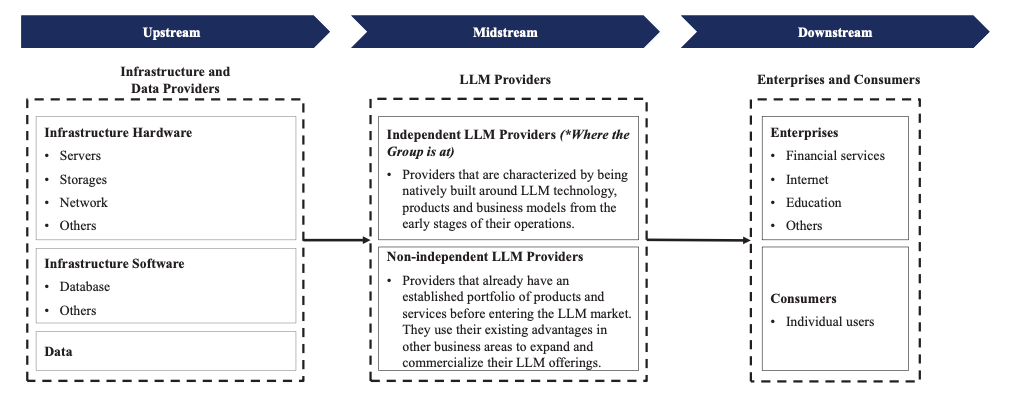

Competition

Competitive Landscape: Integrated Platform vs. Fragmented Solutions

China's LLM market is divided between non-independent providers (technology giants leveraging existing customer bases) and independent developers (pure-play AI companies built natively around foundation model innovation). Among the independents, Zhipu takes a distinctive approach: a research-native, vertically integrated MaaS platform unifying foundation models, multimodal systems, autonomous agents, and coding assistants under its proprietary GLM architecture.

The Obvious Competition:

Non-Independent Providers — Technology giants branching into AI hold the top market position, with established enterprise relationships, diversified revenue streams, and substantial R&D budgets. However, enterprise customers may resist adopting AI from competitors operating in their core markets, creating openings for independent providers offering neutral infrastructure.

Independent Developers — Emerging LLM companies compete for enterprise adoption with varying approaches to model development, deployment flexibility, and vertical specialization. Zhipu ranks first among independent developers with 6.6% market share, but competition for talent, computing resources, and customer relationships remains intense.

International Players — Global AI leaders offer benchmark-leading models, but face regulatory constraints, data localization requirements, and limited domestic infrastructure compatibility versus Zhipu's 40+ chip platform support and flexible deployment options.

Legacy AI Vendors — Traditional enterprise software providers offer point solutions for specific use cases, but lack foundation model capabilities, multimodal integration, or agentic automation versus Zhipu's unified platform.

How Zhipu Competes

Zhipu's moat is built around proprietary architecture and multi-product expansion:

Full-stack infrastructure: Self-developed GLM framework → continuous model iteration → 40+ chip compatibility → flexible deployment

MaaS platform: Connects foundation models, multimodal systems, agents, and coding tools, enabling cross-capability automation that point solutions struggle to deliver

Expansion economics: 50%+ gross margins; 8,000+ institutional customers; 80 million connected devices; 130%+ revenue CAGR

Competition remains intense from well-capitalized technology giants and emerging independents, but Zhipu's research-native, recursively improving, multi-capability platform converts market fragmentation into sustainable competitive advantage.

Financials

Zhipu's financial profile reflects foundation model development scaling toward commercial viability: accelerating revenue growth, expanding institutional penetration, and strong gross margins powered by platform adoption. Ongoing investment in R&D infrastructure drove losses higher, but the business added enterprise customers, deepened deployments, and made progress toward sustainable unit economics.

Growth at Scale

Revenue: RMB312M in 2024, up 151% from RMB124M in 2023, which was itself up 117% from RMB57M in 2022. First half 2025: RMB191M, up 325% YoY from RMB45M.

CAGR: 130%+ from 2022 to 2024—demonstrating rapid commercial traction.

Mix: On-premise deployment 84.8% of revenue (H1 2025); cloud-based deployment 15.2% (growing from subscription and usage-based models).

Profitability: Net loss RMB2,958M (2024) versus RMB788M (2023); RMB2,358M in H1 2025. Adjusted net loss (non-IFRS) RMB2,466M (2024); RMB1,752M (H1 2025)—reflecting R&D investment intensity.
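The growth and loss figures above can be recomputed from the rounded revenue numbers with a short Python sketch. Because the inputs are rounded to the nearest RMB million, the computed percentages may differ from the reported ones by about a percentage point; the dictionary keys and the `growth` helper are illustrative names, not from the filing.

```python
# Rounded figures from this section, in RMB millions
revenue = {"2022": 57, "2023": 124, "2024": 312, "H1 2024": 45, "H1 2025": 191}
net_loss = {"2024": 2958, "H1 2025": 2358}
adjusted_net_loss = {"2024": 2466, "H1 2025": 1752}  # non-IFRS

def growth(current: float, prior: float) -> float:
    """Period-over-period growth as a fraction (1.0 means +100%)."""
    return current / prior - 1

yoy = {
    "2023 vs 2022": growth(revenue["2023"], revenue["2022"]),
    "2024 vs 2023": growth(revenue["2024"], revenue["2023"]),
    "H1 2025 vs H1 2024": growth(revenue["H1 2025"], revenue["H1 2024"]),
}
for label, g in yoy.items():
    print(f"Revenue growth {label}: {g:+.0%}")

# Express losses as a multiple of same-period revenue
for period in ("2024", "H1 2025"):
    reported = net_loss[period] / revenue[period]
    adjusted = adjusted_net_loss[period] / revenue[period]
    print(f"{period}: net loss {reported:.1f}x revenue (adjusted {adjusted:.1f}x)")
```

Expressing losses as a multiple of revenue makes the R&D-driven gap easier to track across periods than the absolute figures alone.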

Ecosystem Momentum

Customer Expansion: Institutional customers grew to 8,000+ (June 2025), spanning financial services, smart devices, internet, healthcare, and public sector verticals.

Device Reach: Approximately 80 million devices powered across smartphones, PCs, and smart vehicles, demonstrating consumer-scale deployment.

Market Position: First among China's independent LLM developers; second overall with 6.6% market share by 2024 revenue.

Margin Structure

Gross Margin: 54.6% (2022), 64.6% (2023), 56.3% (2024), 50.0% (H1 2025). Margins have stayed at or above 50% while infrastructure scaled, though they have trended down since peaking in 2023.

R&D Investment: RMB2,195M (2024), 702.7% of revenue; RMB1,595M (H1 2025), 835.4% of revenue—reflecting foundation model development intensity.

Cost Composition: Computing services made up 71.8% of R&D expenses (H1 2025); payroll costs are declining as a percentage of R&D as computing spend grows faster.
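A similar sketch relates the margin and R&D figures to revenue. It uses only rounded numbers stated in this section; gross profit and computing spend are derived estimates (revenue times gross margin, and R&D times the 71.8% computing share), not reported line items, and the variable names are illustrative.

```python
# Rounded figures from this section, in RMB millions
revenue = {"2024": 312, "H1 2025": 191}
gross_margin = {"2024": 0.563, "H1 2025": 0.500}
rd_expense = {"2024": 2195, "H1 2025": 1595}
COMPUTING_SHARE_OF_RD_H1_2025 = 0.718

for period in revenue:
    gross_profit = revenue[period] * gross_margin[period]  # derived estimate
    rd_intensity = rd_expense[period] / revenue[period]    # R&D as a multiple of revenue
    print(
        f"{period}: gross profit ~RMB{gross_profit:.0f}M, "
        f"R&D {rd_intensity:.1%} of revenue, "
        f"R&D {rd_expense[period] / gross_profit:.1f}x gross profit"
    )

# Derived estimate of H1 2025 computing-service spend inside R&D
computing_h1_2025 = rd_expense["H1 2025"] * COMPUTING_SHARE_OF_RD_H1_2025
print(f"H1 2025 computing services: ~RMB{computing_h1_2025:.0f}M")
```

Comparing R&D with gross profit, rather than revenue alone, shows how far gross profit would need to grow before it covers research spending.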

Closing thoughts

Zhipu AI's financial performance and strategic positioning underscore its potential to redefine how Chinese enterprises operate in an era of AI transformation, technological independence, and digital acceleration. With a research-native, vertically integrated platform, Zhipu has differentiated from fragmented vendors and foreign-dependent solutions while building infrastructure spanning proprietary GLM architecture, multimodal capabilities, and autonomous agent systems.

Bull Case: Zhipu's foundation model leadership provides runway for sustained growth. The company serves 8,000+ institutional customers, powers approximately 80 million devices, and maintains 50%+ gross margins while achieving 130%+ revenue CAGR. Its market position (first among independent developers, second overall) validates competitive differentiation. The addressable market scales to RMB101B by 2030, with substantial penetration headroom.

Bear Case: Intense competition from well-capitalized technology giants that hold the top market position. Accumulated losses and R&D intensity (702.7% of 2024 revenue) create profitability uncertainty. Computing resource dependency and Entity List restrictions amplify supply chain risk. Regulatory evolution may impose compliance burdens.

Success hinges on maintaining technological leadership as competition intensifies, executing multi-product expansion across enterprise verticals, scaling internationally, and converting R&D investments into sustainable profitability. If executed, Zhipu is positioned to capture infrastructure economics as China's digital economy consolidates around domestically developed, commercially proven AI platforms.

Brad Harrison is a former Army Ranger turned venture capitalist, and the founder and Managing Partner of Scout Ventures.

In this conversation, Brad and I discuss:

- Scout focuses on the intersection of critical tech and national security. What’s a technology today that the broader VC market is still sleeping on?

- What’s one thing the startup ecosystem doesn’t understand about working with the government?

- What’s Brad’s take on 3%–5% ownership in early-stage rounds today? Can that still drive meaningful outcomes?

If you enjoyed our analysis, we’d very much appreciate you sharing with a friend.

Here are the options I have for us to work together. If any of them interest you, hit me up!

Sponsor this newsletter: Reach thousands of tech leaders

Upgrade your subscription: Read subscriber-only posts and get access to our community

Buy my NEW book: my book on how to value a company

And that’s it from me. See you next week.